Groq, an AI chip company, has drawn wide attention across the technology world with its remarkable processing speed, challenging the dominance of Nvidia, widely hailed as the “AI chip king.” In recent demonstrations, chatbots running on Groq’s hardware responded so quickly that well-known services such as ChatGPT, Gemini, and Elon Musk’s Grok looked sluggish by comparison.

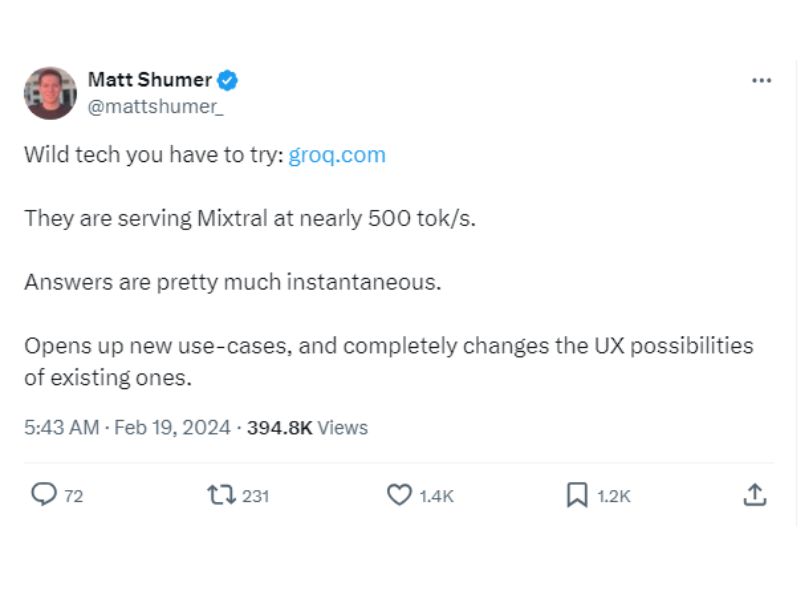

Groq’s Spectacular Performance: A recent demonstration shared on the X platform drew substantial attention for Groq’s speed and efficiency. Within seconds, Groq generated answers hundreds of words long, complete with cited sources. In another demonstration, broadcast on live television, Groq’s CEO, Jonathan Ross, held a real-time conversation with a CNN host through an AI chatbot located halfway around the world.

Groq vs. Nvidia’s GPUs: Groq’s breakthrough is its Language Processing Unit (LPU), a chip the company claims runs language models faster than Nvidia’s Graphics Processing Units (GPUs). Nvidia’s GPUs are currently the world’s most sought-after processors for training and running the rapidly growing crop of AI models, yet early results suggest that Groq’s LPUs can outpace them on speed.

Significance for AI Chatbots: Fast language processing matters a great deal for chatbots like ChatGPT and Gemini. Today’s chatbots often cannot keep pace with real-time human speech; Groq’s processors could close that gap, making these chatbots more practical and responsive.

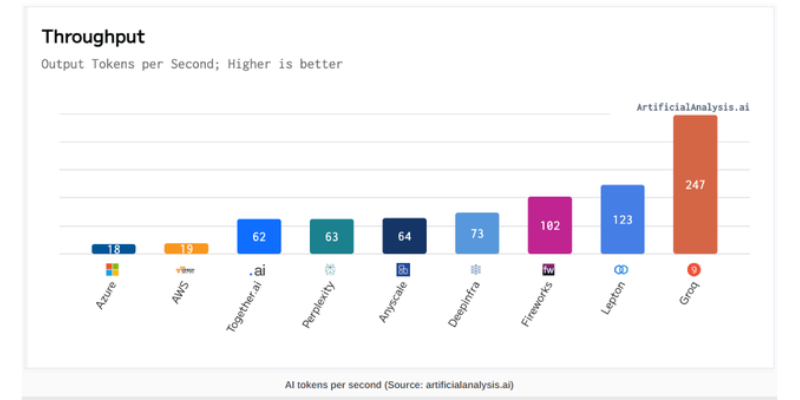

Benchmark Testing: In an independent test by Artificial Analysis, Groq produced 247 tokens per second, compared with 18 tokens per second from Microsoft’s service running on Nvidia GPUs. That ratio of roughly 13.7 to 1 suggests that ChatGPT could run more than 13 times faster on Groq’s chips, provided a similar gap holds for OpenAI’s models. A leap of that size could make AI chatbots far more useful in real-world settings.
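The arithmetic behind the “more than 13 times faster” figure can be checked with a short sketch. It uses only the two throughput numbers reported above; the 500-token answer length is a hypothetical example, and the model assumes a constant streaming rate, ignoring network delay and the time before the first token appears.

```python
# Throughput figures reported in the Artificial Analysis test (tokens per second).
GROQ_TOKENS_PER_SEC = 247
MICROSOFT_NVIDIA_TOKENS_PER_SEC = 18


def speedup(fast: float, slow: float) -> float:
    """Ratio of two throughputs, both in tokens per second."""
    return fast / slow


def generation_time(num_tokens: int, tokens_per_sec: float) -> float:
    """Seconds to stream num_tokens at a constant rate (ignores time to first token)."""
    return num_tokens / tokens_per_sec


if __name__ == "__main__":
    ratio = speedup(GROQ_TOKENS_PER_SEC, MICROSOFT_NVIDIA_TOKENS_PER_SEC)
    print(f"speedup: {ratio:.1f}x")
    # Hypothetical answer length of 500 tokens, i.e. a few hundred words.
    for name, rate in [("Groq", GROQ_TOKENS_PER_SEC),
                       ("Microsoft on Nvidia", MICROSOFT_NVIDIA_TOKENS_PER_SEC)]:
        print(f"{name}: {generation_time(500, rate):.1f} s")
```

Under these assumptions, a 500-token answer takes about 2 seconds at Groq’s measured rate versus about 28 seconds at 18 tokens per second, which is the kind of gap that separates a conversational reply from a noticeable wait.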

Groq’s Founding and Chip Design: Before founding Groq, Jonathan Ross co-founded Google’s AI chip division, which developed advanced chips for training AI models. Groq’s LPUs target two bottlenecks that limit Large Language Models (LLMs) running on GPUs and CPUs: compute density and memory bandwidth.
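Why those two bottlenecks matter can be illustrated with a simple roofline-style estimate. This is a generic back-of-the-envelope model, not Groq’s design or any vendor’s published method: it assumes each generated token costs about 2 × (parameter count) floating-point operations and, when serving one request at a time, one full read of the model’s weights from memory. The `Accelerator` class and all hardware numbers below are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class Accelerator:
    """Hypothetical chip specs; not real vendor figures."""
    peak_flops: float     # floating-point operations per second
    mem_bandwidth: float  # bytes per second


def decode_tokens_per_sec(params: float, bytes_per_param: float, chip: Accelerator) -> float:
    """Rough upper bound on single-stream (batch size 1) generation speed.

    Assumes each new token needs about 2 * params FLOPs and one full read of
    the weights. Whichever resource runs out first, compute or memory
    bandwidth, caps the rate. Ignores KV-cache traffic, batching and overheads.
    """
    flops_per_token = 2 * params
    bytes_per_token = params * bytes_per_param
    compute_bound = chip.peak_flops / flops_per_token
    memory_bound = chip.mem_bandwidth / bytes_per_token
    return min(compute_bound, memory_bound)


if __name__ == "__main__":
    # Hypothetical chip: 100 TFLOP/s of compute, 2 TB/s of memory bandwidth.
    chip = Accelerator(peak_flops=100e12, mem_bandwidth=2e12)
    # Hypothetical 7-billion-parameter model stored as 16-bit (2-byte) weights.
    rate = decode_tokens_per_sec(params=7e9, bytes_per_param=2, chip=chip)
    print(f"upper bound: {rate:.1f} tokens/s")
```

With these made-up numbers, compute alone would allow about 7,100 tokens per second, but memory bandwidth caps generation at about 143 tokens per second, so the chip sits idle waiting on memory. Doubling bandwidth in this model doubles the bound, which is why memory bandwidth, alongside compute density, is a natural target for chips built specifically for language models.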

Industry Response and Future Prospects: Groq has drawn considerable attention for its speed, but questions remain about whether its chips can scale as well as Nvidia’s GPUs or Google’s TPUs. Established players like Nvidia and Google have set industry standards on the strength of their reputations and long experience. Despite the public interest, it will likely take time for Groq to validate its claims.

Global AI Chip Demand and OpenAI’s Initiatives: Surging global demand for AI chips has prompted ambitious efforts to expand supply. OpenAI’s CEO, Sam Altman, is reportedly seeking as much as $7 trillion to boost AI chip production worldwide, an initiative aimed at reducing reliance on Nvidia and meeting the growing need for AI chips.

In conclusion, Groq’s advance in AI chip technology has generated considerable excitement, challenging the status quo and offering a potential alternative to established players in the AI chip market. The coming years are likely to bring intense competition and rapid progress as the industry works to meet soaring demand for efficient, high-performance processors.